Elon Musk’s pending takeover of Twitter and his apparent commitment to free speech have spotlighted the potential flaws of an absolutist approach to overseeing media content. His opposition to “censorship that goes far beyond the law” implies a potential softening of content moderation on the platform that might lead to a rollback of common standards, such as the prohibition of hate speech or the labeling of false information. The rise of disinformation – false information disseminated intentionally that may cause social harm – has clear costs that may be exacerbated by lighter oversight on popular platforms like Twitter.

Evidence of disinformation’s impact on the prevalence of false beliefs is mounting. A Kaiser Family Foundation report found that nearly 8 in 10 survey respondents in the United States believed, or were unsure about, at least one falsehood about COVID-19 or the vaccines against it. In this case, disinformation amounts to a direct assault on public health, with deadly consequences for thousands. False information is also one of the greatest threats to democratic governments around the world, as interference in elections is an egregious attack on the human right to freedom of opinion.

Disinformation has also led to a paradox of mistrust in media. Disinformation campaigns are so effective at discrediting scientists, journalists, and others that a source’s adherence to ethical standards designed to make its information more likely to be true increasingly bears little relationship to whether the public believes that information. In fact, in some cases truthfulness may be inversely related to popular believability – research suggests conspiracy theories and other false news may trigger a stronger emotional response than true stories, enabling their spread. Indeed, surveys indicate that trust in traditional media outlets is hitting new lows, with growing numbers of people viewing the media as more focused on supporting a political ideology than on providing real information.

New Regulation Is Likely, But Effects May Be Limited

Disinformation is a scourge for most countries. However, where protection of freedom of expression is strongest, governments’ ability to combat disinformation is hampered by the tension between the right to freedom of expression and the right to freedom of opinion. These rights are so closely intertwined that they are enshrined together in Article 19 of the Universal Declaration of Human Rights, which guarantees to everyone the “freedom to hold opinions without interference [emphasis added] and to seek, receive and impart information and ideas through any media and regardless of frontiers.”

While disinformation is detrimental to freedom of opinion, measures to prevent it can unduly restrict freedom of expression. This tension hinders democratic governments’ ability to respond to disinformation. For example, a 2018 French law targeting false information during election campaigns had the unintended consequence of leading Twitter to refuse government-sponsored ads encouraging citizens to vote.

The European Commission introduced a pair of regulatory proposals at the end of 2020 aimed at modernizing the EU’s 20-year-old rules for digital services. One of them, the Digital Services Act (DSA), which targets illegal activity and the spread of disinformation, is likely to be finalized this year and to take effect as early as 2023 for the very largest online platforms and 2024 for all other covered companies. The DSA would impose additional obligations on how platforms moderate content.

The DSA would impose extra requirements on the largest online platforms (those with at least 45 million average monthly users in the EU). Initially, these would likely apply to only a handful of companies: Meta Platforms, Alphabet, Twitter, and ByteDance (TikTok). Such companies would be required to conduct annual assessments of the societal risks associated with the use of their services. They would also have to explain, in their terms and conditions, the algorithms used to recommend information to their users, and to offer users the option of content recommendations not based on profiling. Where display advertising occurs, the largest platforms would need to provide public access to data on their targeted advertisements, including the ad contents, the advertising source, the period displayed, and the parameters used for targeting.
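To make these disclosure requirements more concrete, the Python below sketches one public ad-repository entry and the user-count threshold. It is an illustration under stated assumptions, not the DSA’s prescribed format: the names `VLOP_THRESHOLD`, `is_very_large_platform`, and `AdRepositoryEntry`, and all field names, are hypothetical. The only details taken from the text are the 45 million threshold and the four categories of ad data (contents, source, display period, targeting parameters).

```python
from dataclasses import dataclass, field
from datetime import date

# Threshold described in the text for the DSA's largest-platform tier:
# at least 45 million average monthly users in the EU.
VLOP_THRESHOLD = 45_000_000


def is_very_large_platform(avg_monthly_eu_users: int) -> bool:
    """Return True if a platform meets the 45-million-user threshold."""
    return avg_monthly_eu_users >= VLOP_THRESHOLD


@dataclass
class AdRepositoryEntry:
    """Hypothetical record for one targeted ad in a public ad repository.

    The fields mirror the disclosures described in the text; the names are
    illustrative and are not taken from the DSA itself.
    """
    contents: str            # what the ad says or shows
    advertiser: str          # the advertising source
    first_shown: date        # start of the display period
    last_shown: date         # end of the display period
    targeting_parameters: dict[str, str] = field(default_factory=dict)

    def days_displayed(self) -> int:
        # Inclusive count of days in the display period.
        return (self.last_shown - self.first_shown).days + 1


# Usage with made-up values (hypothetical advertiser and targeting criteria).
entry = AdRepositoryEntry(
    contents="Register to vote before 12 June",
    advertiser="Example Campaign Ltd",
    first_shown=date(2022, 5, 1),
    last_shown=date(2022, 5, 31),
    targeting_parameters={"age": "18-34", "region": "FR"},
)
print(is_very_large_platform(50_000_000), entry.days_displayed())  # prints: True 31
```

A record like this is what a researcher could query to see who paid to reach which audience and for how long, which is also why the next paragraph’s concern applies: the same targeting parameters are visible to would-be manipulators.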

Public disclosure of the parameters of algorithms that recommend content and target advertisements may have the unintended consequence of making them easier for bad actors to manipulate, including to spread false information. Researchers at the University of Oxford found that the number of countries where organized manipulation of public opinion through social media took place increased from 70 in 2019 to 81 in 2020. Well-funded state actors are the predominant force behind disinformation campaigns, which are intended to sow discord and distrust within societies to gain political advantage. Private actors that spread falsehoods, such as political campaigns, activists, and advertising firms, can also be highly sophisticated.

In the US, bills such as the Health Misinformation Act take a different approach, aiming to curtail the liability protections that online platforms enjoy for certain types of hosted content. Even if such a blunt tool becomes law (and survives court challenges), it may serve only to entrench the dominance of the largest online platforms, which are best able to absorb potential liability, and to undercut other potential antidotes to disinformation, such as media diversity.

Best Practices to Rebuild Trust

Given the limited potential of legislative solutions, engaged investors may be best positioned to help ensure a balance of freedoms. Encouraging best practices may at least mitigate the worst effects of disinformation stemming from the business activities of media companies. These best practices include the following:

- Responsible oversight of user content and conduct.

- Commitment to content diversity.

- Editorial independence and guidelines.

- Contributions to media literacy.

- Responsible marketing practices.

A complete corporate response to disinformation requires conducting a human rights impact assessment and establishing due diligence processes that go beyond content moderation and encompass the entirety of a company’s operations, business model, and product design. Authoritative guidance, such as the United Nations Guiding Principles on Business and Human Rights, establishes expectations for responsible business conduct that can aid companies in this effort.

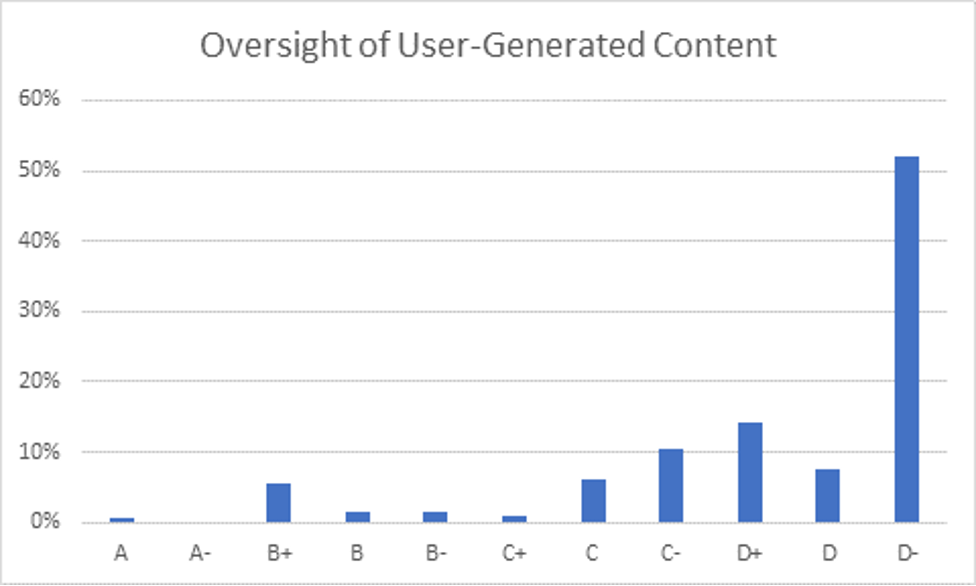

Responsible Oversight of User Content and Conduct

Over the years, responsible oversight of user content and conduct has become a key necessity in media. Many social media platforms have already made substantial efforts at content moderation, including providing reporting channels, removing inappropriate or abusive content, detecting and removing fake accounts, flagging false information, and raising user awareness. Unfortunately, these actions seem largely ineffective – the social media companies that ISS ESG rates highest for oversight of user-generated content, such as Twitter and Meta Platforms, face some of the greatest challenges with disinformation. Overall, an analysis of ISS ESG’s ratings universe shows that social media companies’ performance on this topic is still lagging, with only 9% of rated companies achieving a grade of C+ or higher.

Figure 1: Social Media Platforms’ Performance on Responsible Oversight

Source: ISS ESG Corporate Rating

According to ISS ESG’s Norm-Based Research data, social media platforms’ efforts to remediate disinformation often fail to prevent its recurrence. Even when it comes to incitement to discrimination, hostility, and violence, practices clearly prohibited under international law, companies’ responses may not be thorough enough.

Most often, controversies related to freedom of expression stem from a disproportionate response to what is regarded as false information, driven by problematic government policies that media companies are forced to uphold.

Commitment to Content Diversity

Whereas social media companies’ content moderation practices focus on reducing users’ diet of disinformation, a content diversity approach may reduce the effect of false information by providing content recommendations (or offerings from traditional media) that supplement existing preferences with a broader set of perspectives. A 2021 UN report on combatting disinformation in a manner consistent with upholding human rights highlights the need to “enhance the role of free, independent and diverse media.”

Such a description may be best applied to public service media organizations. Although public funding does not guarantee independence from government interference, it theoretically creates a platform to serve the national population that funds it, whether through fees, taxes, or donations. In many cases, such as with the BBC in the United Kingdom and the Corporation for Public Broadcasting (CPB) in the United States, public media are required to serve diverse communities within their borders, necessitating a wider range of programming than is typical of commercial entities.

A commitment to distributing diverse content applies to social media platforms as well as traditional forms of media. The recommendation algorithms of Twitter, Facebook, Instagram, and others are designed to maximize engagement, but not without constraints. Facebook’s guidelines, for example, make certain verified false or misleading content (such as misinformation about COVID-19 vaccines) ineligible for recommendation even when it is not removed from the platform. Going a step further by building diversity measures, such as by source, viewpoint, or category, into recommendation engines may help users form better-informed opinions.
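The idea of combining eligibility rules with diversity measures can be sketched as a simple re-ranking step. The Python below is a minimal illustration under stated assumptions, not Facebook’s or any other platform’s actual algorithm: the `Item` fields, the `rerank_with_source_diversity` function, and the `penalty` weight are hypothetical, and it handles only one diversity dimension (source). It first drops items flagged as ineligible for recommendation, then repeatedly picks the highest-scoring remaining item after subtracting a penalty for each item already chosen from the same source, a simplified relative of maximal marginal relevance re-ranking.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    source: str                 # publisher or account the item comes from
    engagement_score: float     # predicted engagement, assumed to come from an upstream model
    recommendable: bool = True  # False for content ruled ineligible for recommendation


def rerank_with_source_diversity(items: list[Item], k: int, penalty: float = 0.5) -> list[Item]:
    """Greedily select up to k items, lowering each candidate's score by
    `penalty` for every item from the same source already selected."""
    # Step 1: filter out content that is ineligible for recommendation
    # (it may remain on the platform; it is simply not promoted).
    candidates = [it for it in items if it.recommendable]
    selected: list[Item] = []
    picks_per_source: dict[str, int] = {}

    while candidates and len(selected) < k:
        # Step 2: engagement score minus a penalty for repeating a source.
        best = max(
            candidates,
            key=lambda it: it.engagement_score - penalty * picks_per_source.get(it.source, 0),
        )
        selected.append(best)
        candidates.remove(best)
        picks_per_source[best.source] = picks_per_source.get(best.source, 0) + 1

    return selected


# Hypothetical feed: two items from outlet_a, one from outlet_b,
# and one high-engagement item from outlet_c flagged as ineligible.
items = [
    Item("a1", "outlet_a", 0.90),
    Item("a2", "outlet_a", 0.80),
    Item("b1", "outlet_b", 0.60),
    Item("c1", "outlet_c", 0.95, recommendable=False),
]

print([it.item_id for it in rerank_with_source_diversity(items, k=3)])               # ['a1', 'b1', 'a2']
print([it.item_id for it in rerank_with_source_diversity(items, k=3, penalty=0.0)])  # ['a1', 'a2', 'b1']
```

With the penalty set to zero, the ranking reduces to pure engagement order; raising it trades some predicted engagement for a broader mix of sources. Viewpoint or category could be handled the same way by counting picks per label instead of per source.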

Editorial Independence and Guidelines

The fight against disinformation also requires content-producing media companies to establish solid editorial policies, as well as thorough review processes to ensure the integrity of produced content. Although most traditional media companies don’t originate false information, some are susceptible to incorporating it into their content. Media companies must take care to guarantee the independence of editorial decision making from commercial, political, or other interests (e.g., advertising customers).

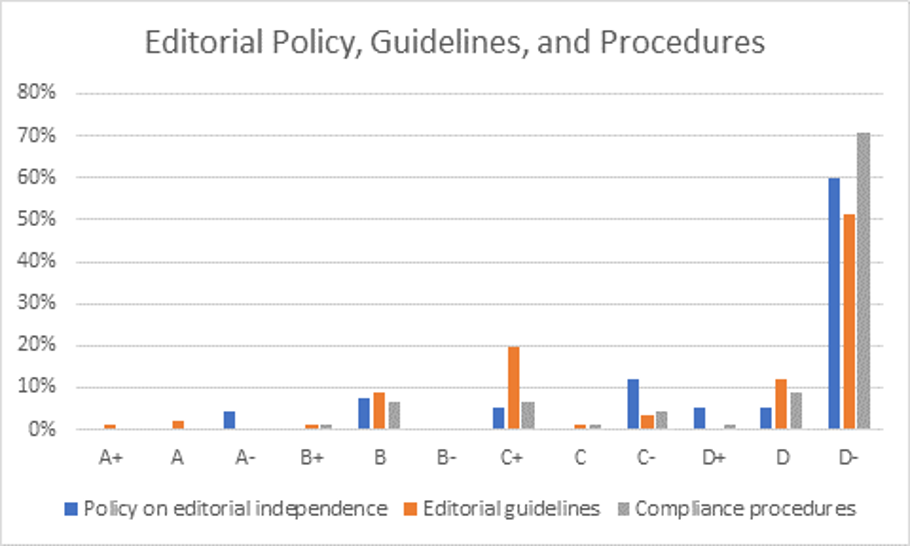

Although ISS ESG awards many companies high grades for their editorial independence policies and the guidelines for carrying them out, fewer companies disclose having relevant compliance procedures in place. This discrepancy highlights a major issue in companies’ performance on ESG topics: they are often better at talking the talk than walking the walk. Thorough compliance measures are critical to ensuring that editorial guidelines are effective in fighting the spread of disinformation.

Figure 2: Companies’ Performance on Compliance Measures for Editorial Responsibility

Source: ISS ESG Corporate Rating

Contributions to Media Literacy

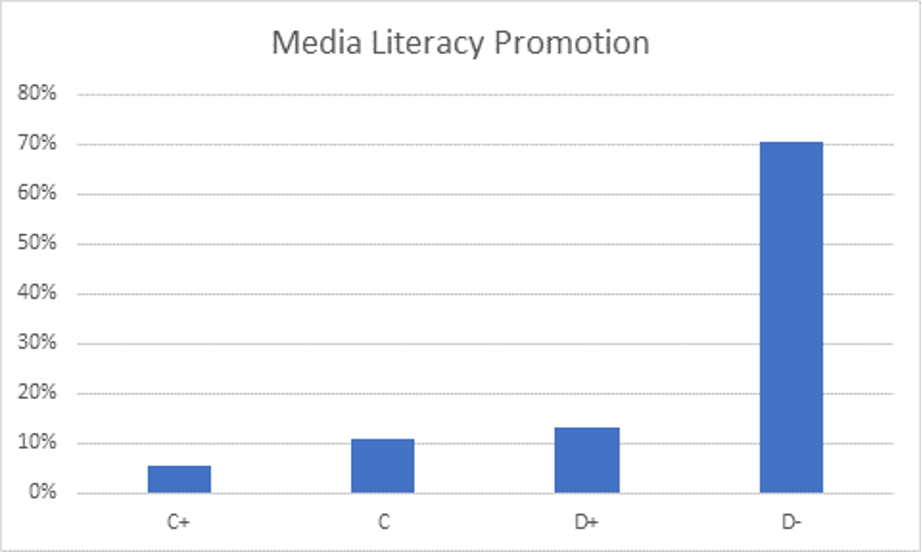

Another best practice that media companies can leverage to cope with false information is improving media literacy among their audiences. Sixty percent of the world’s population uses the internet regularly, but media literacy – the ability to read, understand, and assess information – lags far behind. Improving media and information literacy enables consumers to critically evaluate content and build resilience to false reports. Some media companies already label misleading content, but very few engage in awareness campaigns and educational programs to develop such competencies among their audiences.

ISS ESG’s analysis suggests that most commercial media companies do not view promoting media literacy as their responsibility, instead leaving it to formal education systems. None of the rated entities in our universe receives a grade higher than C+ for its efforts. Indeed, the topic is rarely addressed in company disclosures, and when it is, it often concerns only a small portion of a company’s audience reach.

Figure 3: Companies’ Performance on Promoting Media Literacy

Source: ISS ESG Corporate Rating

Collective Effort and New Business Models May Ultimately Be Required

Efforts to encourage best practices may help restore trust in media, but a broad coalition is likely needed to deliver enduring results. Investors can encourage media companies to partner collectively with civil society and academic institutions in tackling disinformation. Initiatives range from creating tools to evaluate and benchmark online disinformation (such as the Global Disinformation Index) to developing new standards and advocating for policy (for example, EU DisinfoLab). Joint efforts to inform potential new regulations can help avoid the pitfalls of earlier legislation and provide a more level playing field for businesses while addressing the harms that stem from a loss of trust in media.

Combatting disinformation may also require more fundamental changes to the business models of certain companies, particularly in social media. So long as these companies derive revenue from advertising, no degree of transparency (such as that proposed by the DSA) will alter the incentives to drive greater user engagement and to provide advertisers with better-targeted audiences. Media companies that rely on subscriptions or other revenue streams may be better able to withstand measures that detract from engagement or ad targeting (e.g., opt-outs from or opt-ins to interest-based ads), at least to some degree. Ironically, while Elon Musk’s plans for Twitter may weaken the platform’s direct check on disinformation, his focus on growing the company’s subscription business may ultimately remove the advertising-driven incentive for it to reach as wide an audience as possible.

Explore ISS ESG solutions mentioned in this report:

- Identify ESG risks and seize investment opportunities with the ISS ESG Corporate Rating.

- Assess companies’ adherence to international norms on human rights, labor standards, environmental protection and anti-corruption using ISS ESG Norm-Based Research.

By: Joe Arns, CFA, Associate Director, ISS ESG. Thiago Toste, Senior Associate, ESG Methodology Lead – Norm-Based Research, ISS ESG.