The EU Artificial Intelligence Act (AIA) was finalized in March 2024. The AIA builds upon and transforms the OECD AI Principles into regulation, including elements centered around human oversight and accountability, avoidance of discrimination, physical safety, data security, and transparency.

The AIA contains two important takeaways for investors. First, there is a spectrum of risk related to AI use. Regulators and the marketplace will likely attach greater risks to systems that threaten physical safety or privacy or that may lead to discrimination.

Second, among the array of legislative requirements in the AIA, a requirement that may deserve particular attention is quality management. Without a quality management system that overlays every aspect of product design, development, testing, deployment, and monitoring, it is difficult to put much stock in a company’s AI risk controls.

The Spectrum of Risk

The AIA classifies AI models into three different risk categories and sets their legal obligations accordingly. The highest-risk models, ‘unacceptable risk’ AI systems, are prohibited. These include models that use manipulative or deceptive techniques to alter a person’s behavior; social scoring based on social behavior or personal characteristics; biometric categorization systems; and untargeted scraping of facial images from the internet or closed-circuit television footage (i.e., remote biometric identification).

The set of prohibited activities contains exceptions for law enforcement, other government agencies, and the scientific community, and these exceptions may lead to harms the law otherwise seeks to avoid. As an example, law enforcement exemptions for remote biometric identification create risks of human rights violations.

The line between prohibited unacceptable risk systems and the next category, ‘high risk’ systems, may not always be clear. For example, broadly construed, targeted digital advertising may be deemed manipulative. Because the maximum fine for providing or deploying prohibited systems is 7% of annual sales, this ambiguity is a potential issue.

High-risk AI systems include models used as safety components of products (e.g., advanced driver assistance systems) or applied in the following domains: critical infrastructure (e.g., utilities and traffic signals), education, workforce management, law enforcement, and administration of justice and democratic processes, as well as essential services (e.g., banking, insurance, healthcare, and emergency response).

For most investments, a company’s use of AI as a component of product safety is going to be the relevant determinant of ‘high risk’ under the AIA. As detailed in Actionable Insights: Top ESG Themes in 2024, ISS ESG has identified 21 ESG rating industries in which the use of AI in products and services may potentially carry higher risks (Table 1). This assessment expands upon the AIA’s focus on threats to physical safety and discrimination to also encompass privacy threats, the most common potential impact identified.

Table 1: Industries with Higher Potential AI Adoption Risks

| ESG Rating Industries | Potential Negative Impacts |
| --- | --- |
| Aerospace & Defense | Safety |
| Automobile | Privacy, safety |
| Commercial Banks & Capital Markets | Privacy, discrimination |
| Digital Finance & Payment Processing | Privacy, discrimination |
| Education Services | Privacy, discrimination |
| Electric Utilities | Privacy, safety |
| Electronic Devices & Appliances | Privacy, safety |
| Gas and Electricity Network Operators | Privacy, safety |
| Health Care Equipment and Supplies | Privacy, safety |
| Health Care Facilities and Services | Privacy, safety |
| Health Care Technology and Services | Privacy, safety |
| Human Resource & Employment Services | Privacy, discrimination |
| Insurance | Privacy, discrimination |
| Interactive Media & Online Consumer Services | Privacy, safety |
| Mortgage and Public Sector Finance | Privacy, discrimination |
| Multi-Utilities | Privacy, safety |
| Oil & Gas Storage & Pipelines | Safety |
| Public & Regional Banks | Privacy, discrimination |
| Software and Diversified IT Services | Privacy |
| Telecommunications | Privacy |
| Water and Waste Utilities | Safety |

Source: ISS ESG

AI systems classified as high-risk under the AIA will have to follow stringent obligations (Table 2). Failure to comply exposes violators to fines of up to 3% of revenue.
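To put the penalty ceilings cited in this article in perspective, the short Python sketch below converts them into a monetary exposure for a given revenue figure. It is illustrative only: the violation labels, the function name, and the sample revenue are assumptions made for this example, and the calculation uses only the percentage ceilings quoted here, not any other element of the Act's penalty regime.

```python
# Percentage ceilings as cited in this article. The dictionary keys are
# hypothetical labels chosen for this sketch, not terms defined in the AIA.
MAX_FINE_PERCENT = {
    "prohibited_system": 7,        # up to 7% of annual sales
    "high_risk_noncompliance": 3,  # up to 3% of revenue
}


def max_fine_exposure(annual_revenue: float, violation: str) -> float:
    """Return the percentage-based fine ceiling for one violation type."""
    if annual_revenue < 0:
        raise ValueError("annual_revenue must be non-negative")
    return annual_revenue * MAX_FINE_PERCENT[violation] / 100


if __name__ == "__main__":
    revenue = 2_000_000_000  # hypothetical company with 2 billion in revenue
    for violation in MAX_FINE_PERCENT:
        exposure = max_fine_exposure(revenue, violation)
        print(f"{violation}: {exposure:,.0f}")
```

An investor could run the same calculation across portfolio holdings to rank them by theoretical maximum exposure, bearing in mind that actual penalties would depend on regulatory discretion and the facts of each case.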

Table 2: EU AIA Requirements for High-Risk AI Systems

| Requirement | Providers | Deployers |
| --- | --- | --- |
| Human oversight with necessary competence | ✓ | ✓ |
| Risk management system | ✓ | |
| Measures to ensure accuracy, robustness, and cybersecurity | ✓ | |
| Quality management system | ✓ | |
| Instructions for use to deployers | ✓ | |
| Log keeping | ✓ | ✓ |
| Technical documentation and registration | ✓ | |
| Conformity assessment procedure | ✓ | |
| Corrective actions and notification | ✓ | |
| Ensure relevance of input data | | ✓ |
| Inform providers about observed risks | | ✓ |
| Inform people they are subject to AI decisions for certain uses | | ✓ |
| Transparency requirements for generative AI | ✓ | ✓ |

Source: EU Artificial Intelligence Act

For developers of AI systems, or AI ‘providers,’ these obligations include a risk management system that assesses potential impacts when the system is used as intended or when it is misused; measures to ensure accuracy, robustness, and cybersecurity; a quality management system; and instructions on appropriate use. Additionally, conformity with the AIA must be assessed prior to deployment.

Companies that use high-risk AI systems developed by third parties in their products or services, or AI ‘deployers,’ need to ensure that the input data is relevant for the model’s designed purpose; notify model providers of risks observed in use; and inform people when AI systems are used for certain decisions (e.g., hiring).

Both providers and deployers of high-risk AI systems need appropriate human oversight. Moreover, automated record keeping is required for traceability. To the extent the systems involve generative AI technology, additional transparency is required to make it clear to users when they are interacting with an AI system rather than a human. In addition, companies that deploy emotion recognition or biometric categorization systems (e.g., systems that determine political orientation based upon physical characteristics or behavior) must inform the people affected.
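The division of obligations between providers and deployers described above can be sketched as a simple lookup. The Python example below is a minimal illustration, assuming the role assignments given in the preceding paragraphs; the names `OBLIGATIONS` and `obligations_for` are hypothetical and chosen for this example, and the mapping is a summary rather than a legal reading of the Act.

```python
from __future__ import annotations

# Requirement -> roles it applies to, following the prose description in
# this article. Role labels ("provider", "deployer") are assumptions of
# this sketch.
OBLIGATIONS: dict[str, frozenset[str]] = {
    "Human oversight with necessary competence": frozenset({"provider", "deployer"}),
    "Risk management system": frozenset({"provider"}),
    "Measures to ensure accuracy, robustness, and cybersecurity": frozenset({"provider"}),
    "Quality management system": frozenset({"provider"}),
    "Instructions for use to deployers": frozenset({"provider"}),
    "Log keeping": frozenset({"provider", "deployer"}),
    "Technical documentation and registration": frozenset({"provider"}),
    "Conformity assessment procedure": frozenset({"provider"}),
    "Corrective actions and notification": frozenset({"provider"}),
    "Ensure relevance of input data": frozenset({"deployer"}),
    "Inform providers about observed risks": frozenset({"deployer"}),
    "Inform people they are subject to AI decisions for certain uses": frozenset({"deployer"}),
    "Transparency requirements for generative AI": frozenset({"provider", "deployer"}),
}


def obligations_for(role: str) -> list[str]:
    """List the requirements that apply to a given role, in table order."""
    return [name for name, roles in OBLIGATIONS.items() if role in roles]


if __name__ == "__main__":
    for role in ("provider", "deployer"):
        print(f"{role}: {len(obligations_for(role))} requirements")
        for name in obligations_for(role):
            print(f"  - {name}")
```

A company that both builds and uses a high-risk system would take the union of the two lists, which is one reason the provider or deployer distinction matters when assessing a holding's compliance burden.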

Providers of foundational models (e.g., Gemini) must also have a policy on complying with copyright law and keep a detailed summary of data used for training. Whether this will sufficiently address potential IP infringement that has already occurred or will occur prior to full implementation of the law is unclear.

General-purpose AI systems that are considered to pose systemic risks will have to adhere to stricter obligations, including assessing and mitigating such risks, reporting on serious incidents, conducting adversarial testing, and ensuring cybersecurity.

All other models would be considered non-high risk. These AI systems, such as chatbots, would need to fulfill only minimal transparency requirements.
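The tiering logic described in this section can be approximated in a few lines of Python. This is a deliberately simplified sketch: the practice and domain labels, the `RiskTier` enum, and the `classify` function are assumptions made for illustration. It ignores the exemptions, the separate obligations for general-purpose models, and the boundary ambiguity discussed above, so it should not be read as a compliance tool.

```python
from __future__ import annotations

from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk (prohibited)"
    HIGH = "high risk"
    NON_HIGH = "non-high risk"


# Hypothetical labels summarizing the examples given in this article.
PROHIBITED_PRACTICES = {
    "manipulative_techniques",
    "social_scoring",
    "untargeted_facial_scraping",
}
HIGH_RISK_DOMAINS = {
    "critical_infrastructure",
    "education",
    "workforce_management",
    "law_enforcement",
    "justice_and_democratic_processes",
    "essential_services",
}


def classify(practices: set[str], domains: set[str], is_safety_component: bool) -> RiskTier:
    """Assign the most severe tier that applies to an AI system."""
    # Prohibited practices take precedence over everything else.
    if practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    # Safety components and listed domains fall into the high-risk tier.
    if is_safety_component or domains & HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.NON_HIGH


if __name__ == "__main__":
    # A driver-assistance feature used as a product safety component.
    print(classify(set(), set(), is_safety_component=True).value)
    # A résumé-screening tool used in workforce management.
    print(classify(set(), {"workforce_management"}, is_safety_component=False).value)
    # A customer-service chatbot outside the listed domains.
    print(classify(set(), {"customer_service"}, is_safety_component=False).value)
```

Checking prohibited practices first reflects the ordering in the text, where the most severe category overrides any lower classification.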

From a liability perspective, it is important for investors to understand that ‘non-high-risk’ systems are not necessarily ‘no risk’ or even ‘low risk.’ Notably, the AIA adds no incremental protection against the intensified threat to privacy. Although other EU regulations cover data privacy and disinformation (e.g., General Data Protection Regulation, Digital Services Act), none comprehensively addresses the root of the privacy problem: too much data is collected in the first place.

Quality Management Systems Are Crucial

Among the AIA obligations for risk mitigation, the most important may be the requirement for providers of high-risk systems to have a quality management system. The scope of the quality management system must encompass:

- AIA compliance and procedures for the management of AI model modifications

- Procedures for design control and verification

- Procedures for development, quality control, and quality assurance

- Test and validation procedures before, during, and after AI system deployment

- Technical specifications and standards to be applied

- Procedures for data management

- Risk management

- Post-market monitoring, serious incident reporting, and communication with regulators

- Procedures for record-keeping

- Resource management

- Accountability framework

A comprehensive approach to quality management in general, beyond the regulatory requirements of the EU, may be the best way for companies to control AI risks related to safety, data security, and avoidance of discrimination.

Although quality management is not specifically a safety standard, adherence to it can help to ensure that robust quality control and quality assurance processes are in place, thereby maintaining and improving AI model accuracy and resilience. Further, quality management may increase the likelihood that risk control efforts prove successful. Demonstrating a rigorous commitment to product quality and safety may also speed AI adoption. Trust appears to remain a considerable hurdle to the uptake of AI tools, even if their efficacy is apparent. Best practice may include certification of a quality management system to an international standard such as ISO 9001.

The ISS ESG Corporate Rating already offers indicators that address product quality and safety. The rating separately addresses components of the product value chain, from design and development to production processes through to customer support and protection. Companies scoring higher on these metrics may be more likely to mitigate the potential negative impacts of AI.

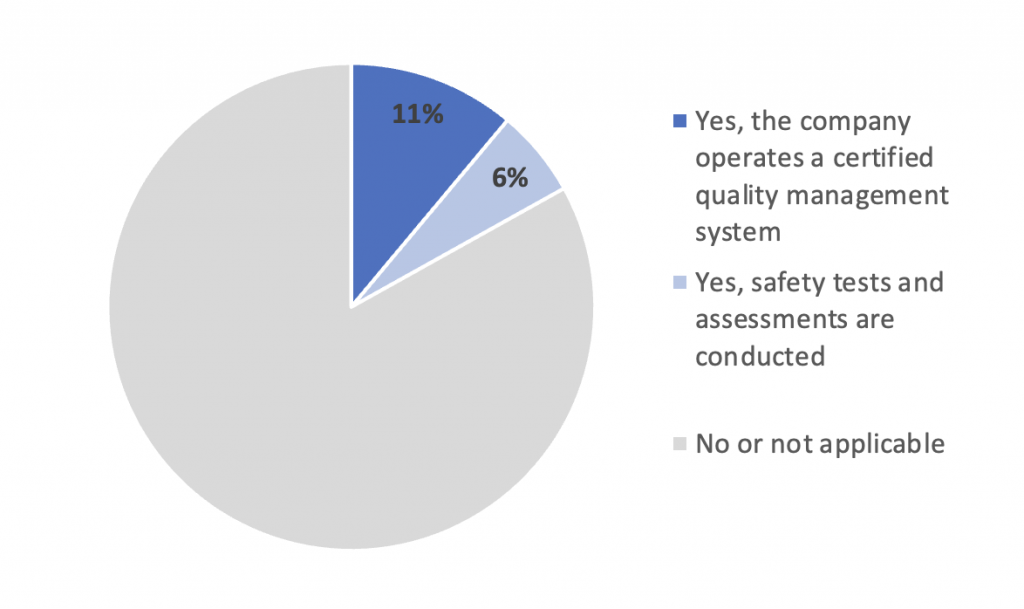

At least 1 in 10 companies in the ESG Corporate Rating universe operates a certified quality management system. Notably, approximately 45% of these companies are involved in the production of electronics, semiconductors, electrical equipment, or software. Also, approximately 1 in 20 companies conducts product safety tests and assessments even without a certified quality management system in place (Figure 1).

Figure 1: Product Safety Tests and Assessments Practices

Source: ISS ESG

Regulation and Legal Frameworks Will Adapt to AI, but Investors Can Already Assess Risks

The AIA is unlikely to be the final word on AI regulation globally. In the U.S., for example, there is a growing consensus on the need for regulation, with state legislatures already taking action to increase institutional knowledge of AI risks. California is looking to build upon the approach taken in the EU.

The risk-based approach to AI regulation already provides investors with a window into the AI-related risks and opportunities in their portfolios. ISS ESG has identified 21 of 73 ESG Corporate Rating industries as having a higher level of risk due to potential impacts on data privacy, physical safety, and discrimination. Within these industries, quality management may prove paramount to ensuring the accuracy, robustness, and security of AI systems and the products that embed them.

Explore ISS ESG solutions mentioned in this report:

- Identify ESG risks and seize investment opportunities with the ISS ESG Corporate Rating.

By:

Joe Arns, CFA, Sector Head, Technology, Media, & Telecommunications, ESG Corporate Ratings, ISS ESG

Rafael Alfonso Bastillo, ESG Corporate Ratings Analyst, ISS ESG

Harriet Fünning, Senior ESG Corporate Ratings Specialist, ISS ESG

Nat Latoza, ESG Corporate Ratings Analyst, ISS ESG

Rachelle Piczon, ESG Corporate Ratings Specialist, ISS ESG

Harish Srivatsava, Lead ESG Corporate Ratings Specialist, ISS ESG